|

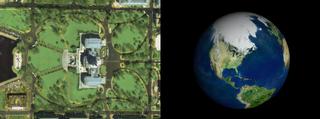

The zoom would start with a tight view of the US Capitol using an NTSC-video resolution sub-image (720 by 486 pixels) from the IKONOS image. The point of view would move up, revealing more and more of the IKONOS image until the edges of the image were reached. The IKONOS image is about 10,000 pixels wide at 1 meter per pixel, so the effective resolution of the full image on an NTSC screen would be 10,000 meters/720 pixels, or about 14 meters per pixel. This is about the resolution of the Landsat image, so the transition to the Landsat image could be made at this point. Since each image had 15 to 17 times the resolution of the previous image in the sequence, appropriate data would be available for each transition all the way to a global view. The curvature of the Earth affects this calculation of resolution, but, since the Earth curves away from the viewer, less resolution is needed when the curvature becomes significant.

Figure 2: The beginning and end of the zoom sequence

As the animation scene was constructed, the following significant issues were encountered:

|

Goddard Space Flight Center coordinates a major release of information about Earth science research efforts on Earth Day every year. It was decided that zooms to major US cities would form the linchpin of the 2001 release [7], providing a local focus point for news organizations to discuss broader stories of Earth science research and remote sensing technology. This release prompted the development of a process for creating the zooms that led through the Earth Day release to several major event releases, including both the Super Bowl and the Olympics.

3 THE REGISTRATION SHADER

The critical element of our process that needed improvement was texture registration -- the precise placement and layering of imagery onto a three-dimensional model of the globe. Without precise texture registration, the different satellite image layers could have visible seams and other artifacts. The software that we used for the initial zoom did not allow for accurate texture placement on arbitrary spherical segments.

Efficient access to textures at runtime was also necessary. The rendering software we used for the initial zoom loaded all textures into memory before rendering. Since we were dealing with very large textures, we needed software that loaded the texture pieces on-demand.

These requirements led us to look for rendering software that provides programmatic control over the renderer. Pixar's RenderMan software provides this control through Shading Language [8], allowing for the creation of procedural shaders. A procedural shader is a small program that is called every time a ray hits an object, and returns the color and opacity of that object. Shading Language also has a very efficient texture lookup function that reads MIP-mapped, tiled image files on-demand.
We developed a simple, elegant solution to our texture registration problem: a single registration shader that encapsulated the precise placement, layering, and blending of all four data sets using parameters that specified the longitude/latitude extents of each data set. The registration shader was applied to simple sphere geometry, then rendered.

The registration shader is passed four images with associated longitude/latitude extents. The images include embedded alpha channels used to smoothly blend between layers. When the shader is called at a particular point, the longitude and latitude are determined from the (u,v) surface coordinates at that point. A texture call is made for each layer, returning the color and opacity for that layer. The colors are composited with the global image on the bottom and the 250-meter, 15-meter, and 1-meter images layered above it, in that order.

The single registration shader, although elegant, had a serious problem. When the camera was close enough to the sphere to be at the final zoomed-in location (e.g., the US Capitol), there were numerous artifacts. We determined that we had run out of (u,v) surface coordinate precision: the coordinates being passed to the shader were not accurate enough for texture registration.

The surface coordinate precision problem was solved by placing a small patch in the exact position where the IKONOS data should be located. This gave the renderer a separate (u,v) coordinate space in which to map the imagery correctly. A simplified version of the registration shader was applied to this patch. This solution works because the sphere has negligible curvature over the very small region of the IKONOS patch, so the patch can be treated as a flat rectangle. |
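The layering logic described above can be illustrated with a minimal Python sketch. It is not the RenderMan Shading Language shader used in the production. The `Layer` class, the `uv_to_lonlat` and `shade` functions, and the linear (u,v)-to-longitude/latitude mapping are all hypothetical names and assumptions introduced here for illustration. The sketch also assumes non-premultiplied alpha and a standard "over" composite, neither of which is specified in the text.

```python
from dataclasses import dataclass
from typing import Callable, Sequence, Tuple

RGBA = Tuple[float, float, float, float]  # red, green, blue, alpha in [0, 1]


@dataclass
class Layer:
    """Hypothetical stand-in for one image plus its lon/lat extents (degrees)."""
    west: float
    east: float
    south: float
    north: float
    sample: Callable[[float, float], RGBA]  # texture lookup at (s, t) in [0, 1]


def uv_to_lonlat(u: float, v: float) -> Tuple[float, float]:
    # Assumption: u spans longitude -180..180 and v spans latitude -90..90.
    return -180.0 + 360.0 * u, -90.0 + 180.0 * v


def shade(u: float, v: float, layers: Sequence[Layer]) -> Tuple[float, float, float]:
    """Composite the layers, ordered bottom (global) to top (finest), at (u, v)."""
    lon, lat = uv_to_lonlat(u, v)
    r = g = b = 0.0
    for layer in layers:
        # Convert lon/lat into this layer's own texture coordinates.
        s = (lon - layer.west) / (layer.east - layer.west)
        t = (lat - layer.south) / (layer.north - layer.south)
        if not (0.0 <= s <= 1.0 and 0.0 <= t <= 1.0):
            continue  # the point lies outside this layer's extents
        lr, lg, lb, a = layer.sample(s, t)
        # "Over" composite: the layer's alpha blends it onto what lies below.
        r = lr * a + r * (1.0 - a)
        g = lg * a + g * (1.0 - a)
        b = lb * a + b * (1.0 - a)
    return r, g, b


# Usage: an opaque blue global layer under a half-transparent red regional layer.
global_layer = Layer(-180.0, 180.0, -90.0, 90.0, lambda s, t: (0.0, 0.0, 1.0, 1.0))
region_layer = Layer(-78.0, -76.0, 38.0, 40.0, lambda s, t: (1.0, 0.0, 0.0, 0.5))
inside = shade(103.0 / 360.0, 128.9 / 180.0, [global_layer, region_layer])
outside = shade(0.5, 0.5, [global_layer, region_layer])
```

In this sketch every layer derives its texture coordinates from the same sphere-wide (u,v) pair, so any loss of precision in (u,v) affects the finest layer most. That is consistent with the artifacts reported at the final zoomed-in location, and with the fix of giving the IKONOS patch its own (u,v) space.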